understanding understanding

and updates from Davos and the House of Lords

Greetings all, from Taipei, where we celebrate the lunar new year!

So here we are, barely keeping ahead of events, as the metaphors are being marshalled for deployment before a judge. There is much to remark on in the class action filed against Stable Diffusion, Midjourney and DeviantArt, but let me try and recap where I think we are in the battle of metaphors, drawing on what I’ve been seeing in social media and the blogs, and starting from the positions taken in the class action complaint.

a little dialogue

Skeptic: The model makes hybrid images from the images it was trained on.

AI Fan: The model has been trained on so many images and words that your characterization no longer makes sense; the models can generalize from words to image.

Skeptic: Oh, I respect the power of the statistical model. But it is a kind of prediction machine based on past history, not something that truly generalizes. And anyway, the model couldn’t work without containing highly compressed versions of the images it was trained on.

Fan: How much compression will be enough to satisfy you that something else is going on here? That the model can create images of features in the world based on what it has learned from language and images together?

Skeptic: Stop saying it has learned! The model is just interpolating based on its training data.

Fan: So do you. Every time you try to draw a dog or a cat, you are calling on images of all the dogs and cats you’ve seen, in life, from pictures and photos, etc… And by the way, you can’t draw as well as Stable Diffusion…

Skeptic: But I didn’t copy (copyrighted!) images into memory, I looked at them, it’s a very different thing. Also I have touched dogs and cats, loved them, fed them, heard them, been scratched and bitten by them, I understand dogs and cats as complex characters with their own mysterious subjectivities (well, at least cats). I have relationships with them. If I could draw, I would call on all that when making an image.

Fan: Yes, you have a richer set of data to draw upon for your generalizations of dogs and cats, but don’t worry, the models will catch up.

And so it goes… the AI fans are hard to keep down, the field is moving ever more quickly, and this debate has a long history, see Chomsky vs Skinner, etc…

Back to the class action

The complaint takes nearly ten pages to describe the functioning of the Stable Diffusion model, with the conclusion that it is “a 21st-century collage tool”. The key points highlighted in this explanation of the model are that it “interpolates” compressed images in the model “into hybrid images… There is no other source of visual information entering the system.” The complaint goes on to say that “Defendants’ AI Image Products contain copies of every image in the set of Training Images and are capable at any time of producing as output a copy of any of the Training Images.”

In the Jan 16 edition of the newsletter you will remember me saying that “the naive criticism that Stable Diffusion is just ‘mashing up images that it has copied’ drives Stable Diffusers crazy, and I think for good reason.” The corresponding claim in the class action complaint is much less naive, but I think it will still drive the Diffusers nuts. Will it also feature as a point of contention if this comes to trial? I imagine so, since if one accepts that the unauthorized copies are “in” the model, then every download of the model, and every use of the model, is making more copies and derivatives.

I also said that the model cannot produce exact copies, but rather can (under certain conditions) produce very similar copies of material it was trained on. I do rather wish that the complaint had nuanced this point just a touch (“at any time?”), but perhaps it will work in the defendants’ favour to explain that the copies the system creates are by definition going to be of lower quality, and will often incorporate other material into their reproduction (see the Somepalli et al. paper cited at the end of this issue). This could be argued to increase the other harms to name and reputation that the complaint includes.

I did not say that the Stable Diffusion model contains copies of the images it was trained on. It’s not that I disagree, it’s that I’m still trying to understand in more precise detail how this can be said to be so, and how it can be said not to be so.

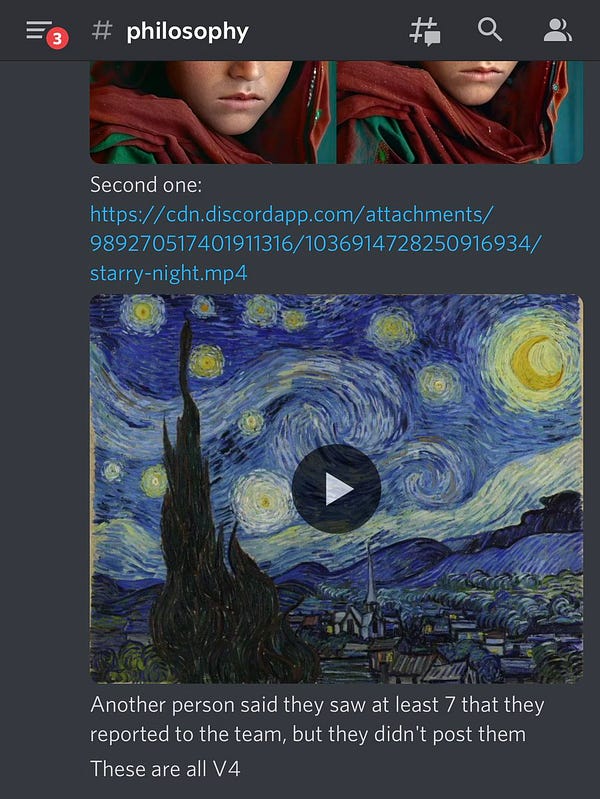

How often does Stable Diffusion produce copies? The Somepalli paper, cited above, looked for very similar images between training data and output, and found them in Stable Diffusion about 2% of the time. When does a model produce copies? Sometimes it happens when particular, relatively unique combinations of words are very tightly associated with an oft-repeated single image in the training set. In such cases the results might be highly influenced by that single image, to the extent that prompts with the right key words will yield only a slightly varied copy of that image. Karla Ortiz managed to get copies of Steve McCurry’s Afghan Girl from Midjourney V4 (based on Stable Diffusion), for example.

AI defenders will describe this as “overfitting”, a fault that often occurs in models where data is not rich enough (in this case there are presumably not enough different images that have captions saying “Afghan girl” in them). The model does not have enough data to “understand” “Afghan” in relation to “girl” in sufficient generality. Solution? Just add more images! Somepalli et al. warn, however, that copying in Stable Diffusion cannot always be explained by overfitting, which just reminds us how little we understand how these models really work.

The battle of the metaphors is just getting started. It is fascinating to see the different energies that are coming to this struggle. For a clear articulation of the argument against anthropomorphism (but speaking mostly of the large language models), see this recent paper from Murray Shanahan, ‘Talking About Large Language Models’.

If we prompt an LLM with “All humans are mortal and Socrates is human therefore”, we are not instructing it to carry out deductive inference. Rather, we are asking it the following question. Given the statistical distribution of words in the public corpus, what words are likely to follow the sequence ‘All humans are mortal and Socrates is human therefore’? (Shanahan, 2022, p. 8)
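To make Shanahan’s framing concrete, here is a deliberately tiny sketch of “what words are likely to follow” as pure counting. It is not how a large language model works internally (those use neural networks over far longer contexts); it is a bigram counter over a three-sentence corpus that I made up for illustration, and the function names are my own.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus, invented for this illustration.
TOY_CORPUS = [
    "all humans are mortal and socrates is human therefore socrates is mortal",
    "all cats are mammals and tom is a cat therefore tom is a mammal",
    "socrates is human therefore socrates is mortal",
]


def train_bigram_counts(corpus):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev_word, next_word in zip(words, words[1:]):
            counts[prev_word][next_word] += 1
    return counts


def next_word_distribution(counts, prompt):
    """Return the relative frequency of each word seen after the prompt's last word."""
    last_word = prompt.lower().split()[-1]
    followers = counts.get(last_word, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}
    return {word: n / total for word, n in followers.items()}


counts = train_bigram_counts(TOY_CORPUS)
dist = next_word_distribution(
    counts, "All humans are mortal and Socrates is human therefore"
)
# No deduction happens here: "socrates" simply follows "therefore" most often.
print(max(dist, key=dist.get), dist)
```

The toy answers “socrates” without any notion of mortality or inference, which is the Skeptic’s point; the Fan’s reply would be that scale and richer architectures change what such prediction amounts to.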

To take up the devil’s advocate position, as I did in the Jan 16th newsletter to an extent, the science of neural networks has been built up over decades by trying to understand how the brain (or a computer) might process information in order to move from raw sensory input to the point of processing symbols, to relating images to concepts-in-the-world. (With the large language models in ascendance, this journey attempts to get there via language.) The architecture of neural networks enables this very elegantly, and the different layers of the network can be said to discriminate higher orders of organization of data, including patterns we would think of as concepts.

This is a very important point for segments of the AI community, as exemplified in this text from Roman Leventov on LessWrong:

I think the attempts to draw a bright line between AIs “understanding” and “not understanding” language are methodologically confused. There are no bright lines; there is more or less grounding (PS: in real-world interactions), and, of course, models that process only text have as little grounding as possible, but they do understand a concept as soon as they have a feature(s) for it, connected to other semantically related concepts in a sensible way.

Again with the understanding! By feature he means a set of weights in a particular level of the neural network that reliably discriminates between different tokens or images.

But the weakness in this position as far as the class action goes is that all of that impressive marshalling of concepts is being used only to choose a position in latent space, along multiple dimensions, between the different images that have been copied (without permission!) and then compressed or abstracted. In copyright terms, the entire latent space is derivative of the images it was trained on, even if it can yield images that look original. And here the spatial metaphor makes its return…
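For readers who want the spatial metaphor spelled out, the following is a minimal sketch of what “choosing a position between” two points in a latent space means arithmetically. It is an assumption-laden toy: the three-number vectors and the `lerp` helper are invented here, real latent spaces have thousands of dimensions, and Stable Diffusion generates images by iterative denoising rather than by this simple blend. It illustrates the complaint’s picture, not the model’s actual mechanics.

```python
def lerp(a, b, t):
    """Linearly interpolate between two equal-length vectors.

    t = 0 returns a, t = 1 returns b, values in between blend the two.
    """
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


# Hypothetical latent codes standing in for two compressed training images.
latent_a = [0.0, 2.0, -1.0]
latent_b = [4.0, 0.0, 1.0]

# A point halfway "between" the two images in this toy latent space.
midpoint = lerp(latent_a, latent_b, 0.5)
print(midpoint)
```

On the complaint’s reading, every output is some such in-between point; the Fan’s objection is that the axes of the space themselves encode learned concepts, so the position chosen need not resemble any single training image.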

My goodness, we’re still discussing just one point in the complaint. There are lots of other things to talk about! The claim against DeviantArt in particular is fascinating, along completely different dimensions than the ones covered here. I guess we will save these other points for a future issue.

The Lords are not happy…

The House of Lords Communications and Digital Committee, which held hearings in October last year, has released its preliminary report, and it is pretty scathing on the UK IP Office’s proposed new TDM exception:

The Intellectual Property Office’s proposed changes to intellectual property law are misguided. They take insufficient account of the potential harm to the creative industries. They were not even defended by the minister in the Department for Digital, Culture, Media and Sport whose portfolio stands to be most affected by the change. Developing AI is important, but it should not be pursued at all costs. (Paragraph 34)

On generative models, the committee sees the need to mobilize: “New technologies are making it easier and cheaper to reproduce and distribute creative works and image likenesses. Timely Government action is needed to prevent such disruption resulting in avoidable harm.”

Meanwhile, in Davos they love GPT-3…

The recently concluded World Economic Forum was said (by The Guardian anyway) to have had an optimistic mood, shaking off war and inflation and climate disaster in anticipation of all the investment opportunities to come from the green transition and AI. While machine learning may indeed help us with scientific work, the Davos crowd also sees ChatGPT as a force for good growth, as captured in a paper commissioned by the WEF and written by Nicholas Stern as lead author.

“Foundation models like ChatGPT (large, pre-trained language models that create the ability for anyone to produce text and code) are a major tipping point for AI, with the potential to transform work and how we communicate, unlocking new sources of productivity and growth. A recent PwC study estimates that AI technologies could increase global GDP by $15.7 trillion by 2030 (PwC, 2017). With economic payoffs as large as this, we can expect a rapid scale-up of and increased investment into AI.”

Well, that PwC estimate came long before foundation models… Anyway, machine learning to improve battery technology is definitely a plus, but I’m afraid I’m not quite so optimistic about the impact of GPT-3 on the way we communicate, especially not if the interests of copyright holders are to be sacrificed at its feet!

(For a more detailed discussion of this issue, see Somepalli, Gowthami, Vasu Singla, Micah Goldblum, Jonas Geiping, and Tom Goldstein. ‘Diffusion Art or Digital Forgery? Investigating Data Replication in Diffusion Models’. arXiv, 12 December 2022. https://doi.org/10.48550/arXiv.2212.03860.)