Rightsholders are fighting legal battles over AI model training that happened three or four years ago. Discovery in the US cases has confirmed our worst suspicions, and now we are getting the first rulings. Still, we need to save some energy for understanding what is happening now in AI training and use of copyrighted materials. I’ll give that a go.

Will you be surprised when I tell you that even as AI companies are licensing more, latest trends are extending the use of copyrighted materials further, without licensing, consent, credit or compensation?

Peak Data

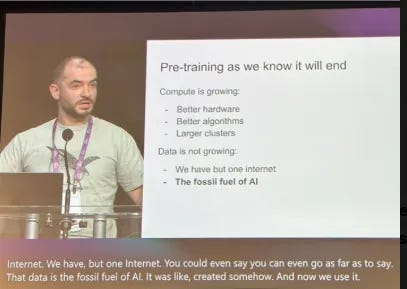

It was long long ago, in December 2024, at the prime meeting for AI research, the NeurIPs conference, that llya Sutskever, onetime OpenAI chief scientist announced in his keynote that we had “reached peak data”.

He compared texts to fossil fuel, a resource that “was like, created somehow. And now we use it.” I guess that makes publishers and writers the dinosaurs... living relics from the Jurassic who “somehow” created content for the benefit of AI model builders. Sacrificing their bodies for future generations…

First of all, is Ilya right? I mean, he should know, but let’s review the picture. Up until 2024 or so, everyone was training on a few key text databases (first BookCorpus and Wikipedia for BERT, then Github, the Enron emails, European and Canadian parliamentary records, Arxiv, etc) and then increasingly, subsets of CommonCrawl, the content reserve made by strip-mining the internet, just to keep up the fossil fuel metaphor. (CommonCrawl, may I remind you, is fully sponsored by Amazon). CommonCrawl text — itself only ever a small proportion of the Internet — was pruned and refined, with some of those curated subsets shared openly.

Why just subsets of CommonCrawl? Because the quality of your models starts to decline if you use too much CommonCrawl, and you do not filter and prune your data ruthlessly. The internet is full of dreck.

What we’re running out of is not “the internet”, but high quality text from the internet. It’s not that “there’s only one internet” as Ilya says, but that “the internet has only so much high quality text”.

Which is why Big Tech has resorted to accessing pirate websites to access copyrighted books and journal articles, etc.

Because, when the reserves run low, what do you do?

You invest in new exploration, in the hopes that you can find things that were overlooked by the early surveyors. This is why all the hyperscalers have launched their own webscrapers, betting they can come up with better search-and-scrape strategies than CommonCrawl (which was never designed for AI training). We’ve seen an explosion of scraping, for general pre-training as well as for all sorts of specialised data collection and index-building.

You become willing to spend more money to extract harder to find reserves. Energy companies spent huge amounts of money to figure out how to drill for oil offshore in deep waters. Some AI companies are now agreeing to licensing deals (for pre-training) with publishers for texts that are not easily scraped from the internet.

You start fracking, extracting every last bit of value from the reserves that you control. For AI text, this is the very useful strategy known as synthetic data.

Let’s review each strategy. Bear with the metaphor a bit longer!

New exploration of old ground

CommonCrawl was not designed to collect data for LLM training, so one thought was to look again for more high quality content online that their scrapers didn’t find. And new content is being created all the time of course. The tech companies have invested in new webcrawlers and scrapers and new techniques and systems for grabbing content. (It’s not just or even mostly for pre-training, companies like Perplexity also need to scrape to feed their “RAG of the internet” systems.)

At the time of writing, ScalePost.AI is tracking 1208 different bots that are scraping your websites. Some of these are from companies who seek to resell scraped data for AI training (for example the Datenbank crawler from Germany). This is why relying on robots.txt as the system for publishers to reserve their rights was never appropriate in the context of EU Law. Not to mention that crawlers don’t always obey robots.txt instructions (I’m looking at you, Bytedance, out of Singapore too...)

Are these new crawlers and new filtering strategies working? They must be to some degree, as the big companies are still investing, and are no longer sharing what is working or not working when they publish their latest model cards.

New territories

Licensing is happening. Wiley famously reported US$ 29m AI licensing revenue in its last reporting period (“and senior management says it’s not enough,” said one Wiley exec when I tried to congratulate her.)

Inquiries for training are picking up. Publishers are getting consent from their authors, as they begin to have more serious conversations with data brokers and model builders.

and the fracking...

I’ve been meaning to write about this for a while, but a recent interview in the invaluable Latent Space podcast, with former Meta FAIR researcher Ari Morcos crystalized some thoughts. Morcos set out what he has learned with his high profile startup company, Datology, focusing on a “good data is all you need” strategy. (Morcos has AI heros like Yann LeCunn, Geoff Hinton and Google’s Chief Scientist Jeff Dean as angel investors... so he attracts some attention.)

First rule: Data quality is the single most important factor in model performance. Again, you think that would be a good reason to get content producers on your side... and work with them. (Like Microsoft might be doing now? watch this space…)

By now the strategies for improving data have gotten more and more elaborate. A key breakthrough is synthetic data.

I first noticed this for LLMs in an important late 2023 Microsoft paper titled “Textbooks are all you need”1, that found that filtering for textbook-like material and rewriting texts in the style of textbooks helped boost model performance. Of course you need a decent language model to do that rewriting at scale.

Synthetic data is not a sign of the robot apocalypse. It’s long been a strategy in good-old-fashioned supervised learning, to help smoothen out sensitive datasets. Fill in a few blanks on the gaussian bell curve, make up some stuff, to make sure your model learns the curve, and doesn’t overfit on the gaps. But you need to be pretty sure that the universe has examples that fit your synthetic data, and that’s it’s just chance or bad luck that your original data set is a little lumpier than it should be.

People have talked about creating synthetic text from scratch, by asking LLMs to write new stuff. But that’s a money loser. The analogy is that you’re burning X BTUs of coal to split water molecules to create 0.5X BTUs of clean hydrogen energy. Also, there’s the risk of model collapse, increasing bias and so on. Model outputs tend to the mean, and we need reality’s distribution!

No, the better strategy for synthetic data is to rephrase texts that you already have. In the podcast Morcos pointed to an early 2024 paper, “Rephrasing the Web A Recipe for Compute and Data-Efficient Language-Modeling”. Exactly what it says on the tin.

A toddler will understand

The prompts used in that paper to rephrase the copyrighted works are pretty simple, unsophisticated to a publisher’s ears, awkward even.

For the following paragraph give me a diverse paraphrase of the same in high quality English language as in sentences on Wikipedia.

For the following paragraph give me a paraphrase of the same using very terse and abstruse language that only an erudite scholar will understand. Replace simple words and phrases with rare and complex ones. Said no publisher ever.

For the following paragraph give me a paraphrase of the same using a very small vocabulary and extremely simple sentences that a toddler will understand.

So all of a sudden I have quadrupled the amount of text I have to use to train my models. Even really small and cheap models are good at rephrasing, so I’m not spending too much on this (yes, irony).

The copyright problem here is that these rephrases are derivatives of the original. The right to create them is a monopoly right of the original author and/or publisher. It’s not that you are extracting the facts and leaving the expression behind (as in old-fashioned text and data mining). You are transposing your expression into a different register as in an adaptation or translation.

The copyright issues with this have not occured to the model builders, at least not in public that I’ve heard. Well, it’s up to rightsholders to raise the issue.

I feel a little sorry for running so far with Ilya’s copyrighted text as fossil fuel metaphor (surely we should at least be solar power!) but I do often think about this learning from Dennis Yi Tenen, in his invaluable book, How Computers Learned to Write: Literary Theory for Robots.

Despite big love for the Tenen quote, I’m going to give the last word to three AI engineers, the hosts and guest of the Latent Space podcast I mentioned. One of the Latent Space hosts is Singaporean swyx (represent!) and they passed two million views the other day, so they have a lot more reach than this humble newsletter. I’ve learned a lot from the pod.

In his interview, Morcos did acknowledge Justice Alsup’s ruling around the pirate status of the Books3 dataset. This clip gives you a great view into the mindset of the Silicon Valley model-builders when it comes to copyright and AI. Transformative, transformative. Publishers, can you just get over it already?

Still a long way to go in bridging the gap. But I remain sanguine we will get there.

Suriya Gunasekar, Yi Zhang and Jyoti Aneja, et al, “Textbooks Are All You Need,” 2023, https://arxiv.org/abs/2306.11644