It has been a while since the last issue of this newsletter. Let’s say it was a summer break. (We don’t really have summer in Singapore, but you get the idea.)

One of the things holding up the newsletter was my trip for the Asian Studies in Asia conference, held in Daegu, Korea. The conference ran through the weekend, so I took last Tuesday off to visit a site that’s been on my list for many years - the Haeinsa temple, up in the valley of Mt Gaya, in the midst of a national park. This important temple hosts the remarkable Tripitaka Koreana, a collection of some 81,350 woodblocks of Buddhist scripture, carved over a couple of decades in the first part of the 13th century, so that fresh sets of the scriptures could be printed as needed (yes, after the Mongols had destroyed an earlier set). The blocks were moved to this mountain temple some 150 years later, and have survived here ever since. The temple is a kind of monument to the role of publishing and printing in preserving knowledge for the long term. Still, like their counterparts from the Abrahamic religions, the monks of Haeinsa are keen to think through AI and what it means for them.

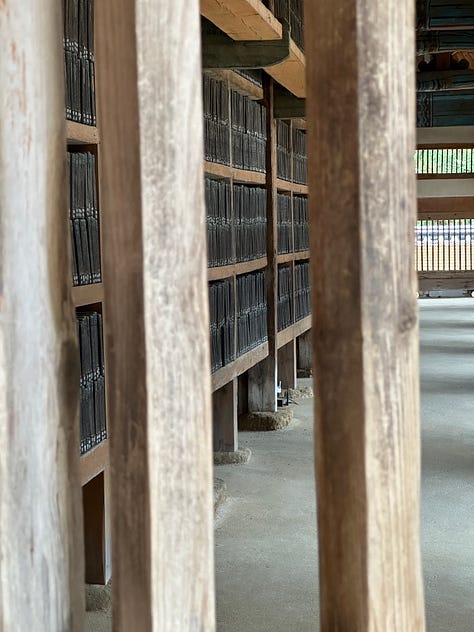

And here are some images of the remarkable buildings that store the woodblocks. So far experts agree that the sensitive design of these buildings, their careful ventilation and the particular composition of the prepared earthen floors make for better long-term preservation than climate-controlled, sealed-off storage… a lesson in what makes for resilience over long timelines.

And speaking of timelines, this newsletter has kept me rolling along more or less weekly. That’s why it’s so nice to see someone do a wrap-up of developments that takes a broader perspective. Johan Brandstedt has done that with the AI training data story in his Substack series:

Well worth a read, especially if you have only come to this newsletter and this subject recently. And thanks Johan for the kind words!

Back to work

Back to a backlog of work, and the world of Zoom, etc. I’ve just come out of an excellent webinar prepared by the Italian Publishers Association (the Associazione Italiana Editori), which involved digital humanities scholar Gino Roncaglia of Roma Tre University, professor of book studies Christoph Bläsi from (where else?) Johannes Gutenberg University, and well-known scholar of bias in generative AI, Debora Nozza of Bocconi University, among others. I presented together with Giulia Marangoni of the Association. I learned so much, and the questions were excellent. I was asked what I meant by the “enshittification” of the media ecosystem, and was embarrassed to see I had misspelled Cory Doctorow’s neologism in my presentation! (It should have two ‘t’s. This phenomenon is being noticed more and more, see here and here, from the irreplaceable James Vincent.) It was nice to start with Roncaglia’s informed overview of AI and neural networks over a seventy-year period. Bläsi presented views of the publishing value chain, and showed us cases where AI was already being tested and deployed within that chain. I liked his advice on how publishing professionals should engage with AI, roughly noted here:

develop their own unique competence and value proposition, including the sense for quality, in tune with the unique values of the publishing house

experiment with and use the most advanced systems – but mostly to better focus on unique competences (see above), and to understand the functioning and limits of these systems.

engage in appropriate activism, which in Bläsi’s analysis includes pushing for European large language models, better copyright regulation and enforcement (with a sense of proportion), and greater transparency from AI companies.

Nozza presented her well-known work on the ways generative AI output demonstrates bias. I am preparing a post on bias in generative AI and what it means for publishers, but that will take a bit more cooking. More too will come, informed by Marangoni, on the ways European publishers are seeking to actualize the opt-out mechanism that the EU’s Digital Single Market Directive mandates under its TDM exceptions.

In the courts

But before that, an update on copyright (and liability) questions in the (mostly American) courts. Three new cases are on my radar, including our first case of an AI company being sued for defamation, by a right-wing radio personality no less.

Walters vs OpenAI, filed in early June, looks like the first defamation case against an AI company, in the US at least. See Variety’s story, Radio Host Sues OpenAI for Defamation Over ChatGPT Legal Accusations.

A high-profile class action against OpenAI, a big one, though it is focused more on privacy than copyright. Also in the Northern District of California. See the Washington Post’s coverage, “ChatGPT maker OpenAI faces class action lawsuit over data to train AI”.

And most recently of all, a copyright class action filed June 28th, against OpenAI by prominent authors Mona Awad and Paul Tremblay. See for example the Los Angeles Times, “Bestselling authors Mona Awad and Paul Tremblay sue OpenAI”. Given that the ongoing Writers Guild of America strike includes concerns about AI, this is an LA story too!

And there have been some updates on earlier cases that we have mentioned before:

Matthew Butterick’s class action against Microsoft/GitHub/OpenAI over Copilot, DOE 1 et al v. GitHub, Inc. et al. The first motion to dismiss was granted on some counts, but not on others. Early June saw a revised complaint, with the inevitable motion to dismiss the revised complaint filed at the end of June. The hearing on the second motion to dismiss is set for September 14th. This case does not involve a copyright claim.

The class action against Stability AI/Midjourney/DeviantArt over Stable Diffusion, Andersen et al v. Stability AI Ltd. et al, in the same court, the Northern District of California. The motion to dismiss was filed in April, counterclaims in June, and the hearing is set for mid-July. This case involves a copyright claim. The motion to dismiss was filed by Mark Lemley, the Stanford law prof who has appeared in the newsletter a few times, most recently for arguing that LLM training is not likely to be considered fair use by the courts.

The Getty Images civil suit filed in the UK - not sure of the current status of this case.

The Getty Images (US), Inc. v. Stability AI, Inc. case, which is being closely watched. The initial motions to dismiss have been filed, but a hearing date on those motions has evidently not been set.

Thaler vs Perlmutter et al, challenging USCO’s decision not to grant copyright for AI-generated images, filed June 22, 2022. The USCO has asked for more time, possibly connected to its ongoing process of discussions and fact-finding.

There are many, many moving parts in cases like these, related to the number and complexity of complaints, and the complications of filing class actions. It’s really hard to predict how these will play out.

I’m trying my best to remember my hike up to the temple on Mt Gaya, and to keep a long-term perspective…