Great poetry? Or lifehack listicles?

A weekly email update on developments in the world of Foundation Models, with a specific focus on the question of how their legal uncertainty will be sorted.

As mentioned in our breaking news special of October 18, the first serious attempt to use litigation to sort out the copyright status of training data is underway in the US. It focuses on the training of Copilot, the software autocomplete tool from Microsoft-owned GitHub, which is based on GPT-3. A reminder of the website of the group considering the suit: https://githubcopilotinvestigation.com/

What I didn't include in earlier newsletters are the numbers being circulated on Copilot's usage. According to GitHub, around 1.2m developers are using the $10/month product, and the first comparative studies (admittedly in-house) show that it enables developers to be 55% more productive. GitHub's CEO recently claimed that about 40% of the code produced by Copilot-enabled developers comes from the algorithm.

There has been some news coverage of this, but not a great deal of commentary so far, despite the importance of the issue to the whole edifice of the Foundation Models project. Andres Guadamuz has a cogent commentary here. He expects the litigation to push some of the activities involved in training the Foundation Models to markets with a text-and-data-mining (TDM) exception, in particular Europe and the UK. To that list I would add Singapore, currently home of the world’s broadest TDM exception.

“However, one thing is certain, and it is that other countries have already enacted legislation that declares that training machine learning is legal. The UK has a text and data mining exception to copyright for research purposes since 2014, and the EU has passed the Digital Single Market Directive in 2019 which contains an exception for text and data mining for all purposes as long as the author has not reserved their right.

The practical result of the existence of these provisions is that while litigation is ongoing in the US, most data mining and training operations will move to Europe, and US AI companies could just licence the trained models. Sure, this could potentially be challenged in court in the US, but I think that this would be difficult to enforce, particularly because the training took place in jurisdictions where it is perfectly legal.”

I’m not sure “perfectly” legal is the right phrase when we still have to understand the interface between commercial and non-commercial exploitation (only the latter is allowed by the UK exception).

The developer community is certainly noticing the Butterick lawsuit. While the potential claim is focused on open source software, which is freely accessible but licensed nonetheless, anecdotal evidence is starting to emerge that closed code is also being reproduced in Copilot.

Also an issue in China

Contrary to the impressions you might have on reading about piracy and theft of intellectual property in China, it’s the only market I know where the staff of publishing companies must attend mandatory courses on copyright law.

This article from China, helpfully translated by the wonderful ChinaAI newsletter from Jeffrey Ding, looks forward to the demise of Visual China Group (视觉中国), a global player in the image bank business, known in China for its aggressive approach to dealing with small businesses using stock images without permission. It sees AI-created images offered by Chinese companies using Stable Diffusion as an end run around Visual China’s dominance. However, the article exhibits the classic misunderstanding around “AI-created” images: that they are not amenable to copyright (at least in some jurisdictions) because they are “machine-created”. This is a misunderstanding of how the tools work, both in regard to the creator who prompts the tool and to the many creators whose works were used to train the tool.

Stable Diffusion goes slow…

In a rare move for an AI company, Stability AI, the company behind Stable Diffusion, announced a delay in the release of a new version of its software. Citing nothing less than “the future of Open Source AI”, the company said that it will convene an “open source committee to decide on major issues like cleaning data, NSFW policies and formal guidelines for model release.” Copyright questions were not mentioned. It also announced measures to tackle deepfakes, an “abuse of machine learning.”

New applications and products

Meanwhile, the development of AI applications and businesses marches on, with ever increasing speed…

OpenAI president and co-founder Greg Brockman was interviewed by Scale AI CEO Alexandr Wang for the opening of the Scale TransformX conference, on the long-term trends in the development of the Foundation Models to which OpenAI has been so central. Brockman previewed GPT-x, the forthcoming next-generation version, by saying that he had prompted it to write poetry for his wife that made her (and him) cry. “I can't do that on my own.” Brockman sees no end yet to the exponential growth in the power of the models, thanks to the convergence of exponential growth in three different domains: computing power (Moore's law), machine learning software efficiency, and the size of the datasets we can train models on.

A recent paper describes a method for training GPT-3 to generate longer stories (the examples given run up to 7,500 words) with a consistent premise, characters and setting. Using prompts iteratively allows one to use the AI to create coherent plots and arcs of character development.

GPT-3 powered writing tool Jasper raises US$125M in funding at a $1.5B valuation. From an online review: “You can finish writing high-quality content 2-5X faster! ✍️ ⏰ Jasper can help write your blog articles, social media posts, marketing emails, and more. 🧠 He has an understanding of nearly every niche since Jasper has read most of the public internet! The content Jasper generates does not pull knowledge from any one single source, rather the content is built from all its sources so it’s word-by-word original and plagiarism-free.”

Meta introduced a speech-to-speech translator, that is, a translator trained on spoken language, not text.

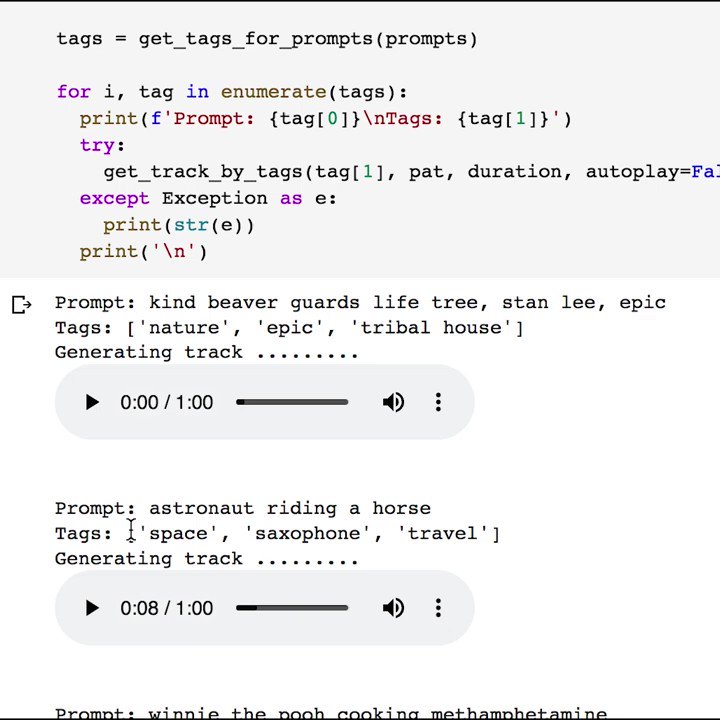

A demonstration of text-to-music generation, which, by connection to Stable Diffusion, was followed by text-to-music-video generation.

Product Launch: Spellbook - AI-written legal contracts, based on GPT-3

And a fun Twitter thread, again persuading me I need to be using AI to up my Twitter game…