Algorithmic disgorgement, ISIS executions...

And other developments of the week ended 1 October

A weekly email update on developments in the world of large language models (LLMs) and image synthesizers, with a specific focus on the question of how the legal uncertainty around these models will be sorted. For more background, please read here.

The horrible stuff being used to train the latest image generation models…

Vice Media’s Chloe Xiang has been doing strong reporting on the image datasets behind some of the new image synthesizer models, her latest an article entitled AI Is Probably Using Your Images and It's Not Easy to Opt Out a follow up from a Sept 21 piece, ISIS Executions and Non-Consensual Porn Are Powering AI Art.

She engaged with LAION, the team behind the dataset used to train the Stable Diffusion model, and Google’s forthcoming ImaGen model. (DALL-E and Midjourney have not revealed on what data they have trained their models). LAION describes itself as “a non-profit organization with members from all over the world, aiming to make large-scale machine learning models, datasets and related code available to the general public.”

Here is Xiang on the datasets:

“They are, by design, scraping images that they do not own, may not be classified correctly, and that copyright holders and subjects may or may not have given their permission to be used to train AI tools. Motherboard previously reported that the LAION-5B dataset, which has more than 5 billion images, includes photoshopped celebrity porn, hacked and stolen nonconsensual porn, and graphic images of ISIS beheadings. More mundanely, they include living artists' artwork, photographers’ photos, medical imagery, and photos of people who presumably did not believe that their images would suddenly end up as the basis to be trained by an AI. There is currently not a good way to opt out of being included in these datasets, and in the cases of tools like DALL-E and Midjourney, there is no way to know what images have been used to train the tools because they are not open-source.”

It looks like LAION claims legal legitimacy from the fact that their datasets are links only… If you want to use their data to train a model they only send you links, model buildings have to do the copying of the images from across the internet themselves. However they also admit that they did copy the photos in order to calculate “similarity scores” between the images and text used to describe them. I’m still digging into the ways that the image generators are built, so I’ll try for an update on all this in the next couple of weeks.

Reporter Xiang is as or more concerned about issue of bias, privacy violation and the abusive potential of the images used to train these models as the copyright implications and their impact on creators. But she does see the lack of accountability on all aspects of this data as linked. At the end of her story she cites a paper from US Federal Trade Commissioner Rebecca Kelly Slaughter, who is directly concerned with the harms that come from AI, and who has a refreshingly clear approach to illegitimate data:

“The premise is simple: when companies collect data illegally, they should

not be able to profit from either the data or any algorithm developed using it.”

The FTC has already enforced “logarithmic disgorgement”, ie the destruction of software and data, in the case of a company that violated its promises to users on the collection of facial recognition data.

Also retrieved from Xiang’s article, the URL of a service which allows you to check and see if your own image (or your author’s) has been used in LAION training data.

https://haveibeentrained.com

In the style of…

One of the recommendations for creating good prompts for image generators like DALL-E2, Midjourney and Stable Diffusion is that you should brief the generator on the style you would like to achieve. And it’s no surprise that many users of these tools will ask for works in the style of well-known named artists. With a bit of luck and practice, the results are clearly recognizable as being in a particular artist’s style. The Forbes article by Rob Salkowitz cited in last week’s newsletter quotes commercial artist Greg Rutkowski, whose name shows up in hundreds of thousands of prompts captured by prompt search engine libraire.ai.

“As a digital artist, or any artist, in this era, we’re focused on being recognized on the internet. Right now, when you type in my name, you see more work from the AI than work that I have done myself, which is terrifying for me. How long till the AI floods my results and is indistinguishable from my works?”

If you want to lose several hours, try using libraire.ai or a similar service at lexica.art, which search prompts and images created with Stable Diffusion. If you are representing a well-known image creator, maybe you need to. Libraire turned up 52,482 results for the search “Studio Ghibli”.

Furthermore, there is now a marketplace for prompts, where particularly good ones can be sold. Here is the story as reported by Verge, with an interview with a prompt writer who has, this should be no surprise, also launched a GPT-3 based tool to help you generate prompts…

One proposal floating around on the Discords (the chat sites popular with AI users) — pay a royalty to artists whose names are used in prompts. Not a bad suggestion, but it doesn’t solve the question of the rights in the images and texts used to create the tool in the first place.

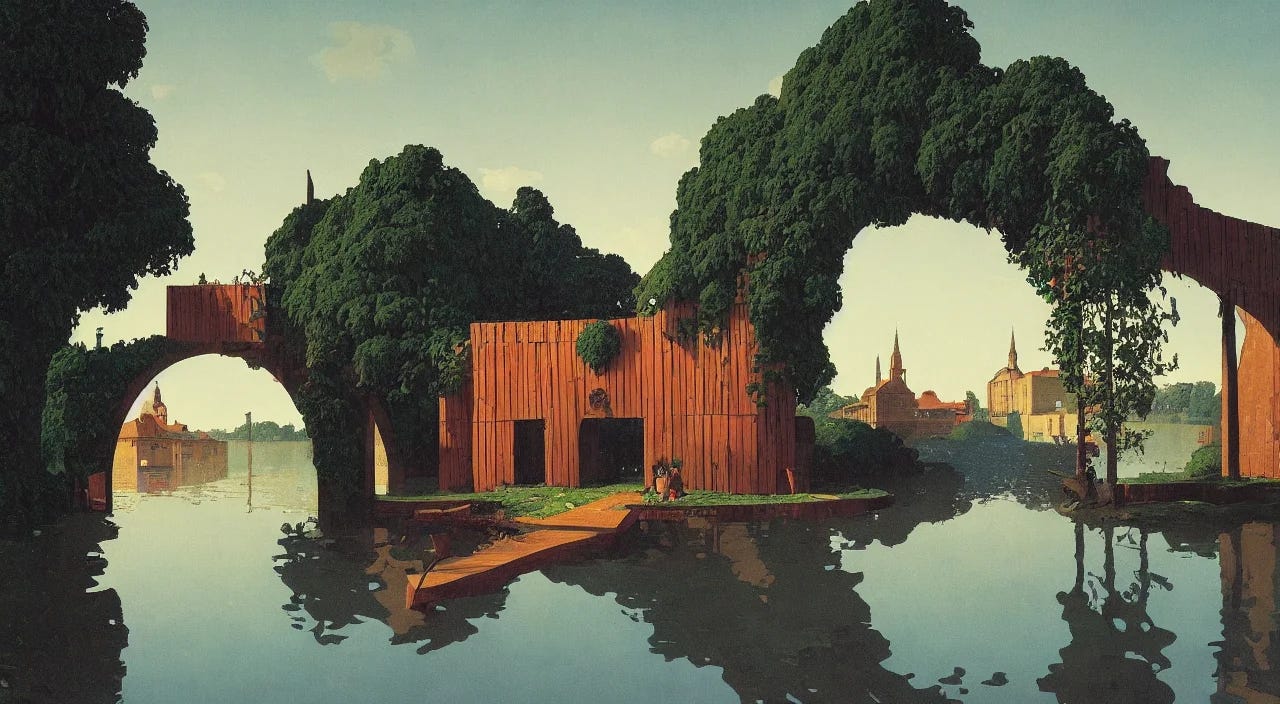

Here’s just one example of an artist-based prompt and the resulting image.

Prompt: “Single flooded simple wooden arch! tower, very coherent and colorful high contrast!! masterpiece by rene magritte simon stalenhag carl spitzweg syd mead norman rockwell edward hopper james gilleard, minimalist, dark shadows, sunny day, hard lighting”

And in a follow up to the Getty Images announcement, what does competitor Shutterstock do?

Nothing, or at least, not much. CEO Paul Hennessy releases an anodyne statement that says remarkably little: “we’re taking steps to look at the impact AI-generated art has on our consumers and contributors.”

James Earl Jones as Darth Vader will live on forever

Over the weekend, Disney announced that they were hiring Ukrainian startup Respeecher to create an AI-generated version of James Earl Jones’ voice, under license, as the character Darth Vader. This sort of speech synthesis technology has been around for some time now, but the licensing of this iconic voice for this iconic character does mark a milestone. Jones is 91, and was thinking of maybe winding down his voice work for Disney. According to the story, the AI-version hearkens more to his original voice work of 45 years ago than to his recent work.

More from the quality papers on the development of image synthesizers

Washington Post: “AI can now create any image in seconds, bringing wonder and danger”

The latest thing AI models can generate: 3d models

Chipmaker nVidia has announced a new model that uses 2D images to generate 3D digital models. However it was trained on “synthetic data”, 2D renders of 3D models, ie apparently without recourse to image databases scraped from the internet. (nVidia also recently announced a new LLM with an innovative training module.)

Then Google announced something similar!

The latest thing AI models can generate: short videos

Meta has shared some early results from a new model that can generate short videos from text prompts.

And all of this just last week…

New Products or Services built on LLMs and other models

Just a few of the products launched or updated on ProductHunt in the last week

AI Grammar Checker - Grammica

Check your writing errors with grammica - https://grammica.com

‘Rewording your article to get unique and plagiarism free content.’

More like a rewriter — hadn’t thought about this risk before: the models can plagiarize without plagiarizing

Peregrine

Expressive Generative Text-to-Speech Model by Play.ht - https://play.ht/ultra-realistic-voices/

Use cases: videos, audiobooks, e-learning, IVR systems

Text to Image Editor

'Photoshop' using only text - https://imgeditor.zmo.ai/

“No photoshop skills needed anymore, transfer any image to what you want simply using text, with zero learning barrier. Just focus on your imagination, AI will do all the rest”

The tech commentators are starting to talk about the new AI tools disaggregating conception from substantiation (or should that be instantiation). The creative act is to come up with the idea, the AI does the rest. At the very least this way of thinking should push out the distracting discussion of whether a synthesized image can be copyrighted. Clearly the writer of the prompts should be able to claim copyright, if the terms of use of the software allow…(a big if, and there’s a whole ‘nother question for a future newsletter…)

Quazel

Get conversational language practice by talking to an AI - https://talk.quazel.com

“In unscripted and dynamic conversations, learners can respond with whatever comes to mind just like you would in real-life conversations.”